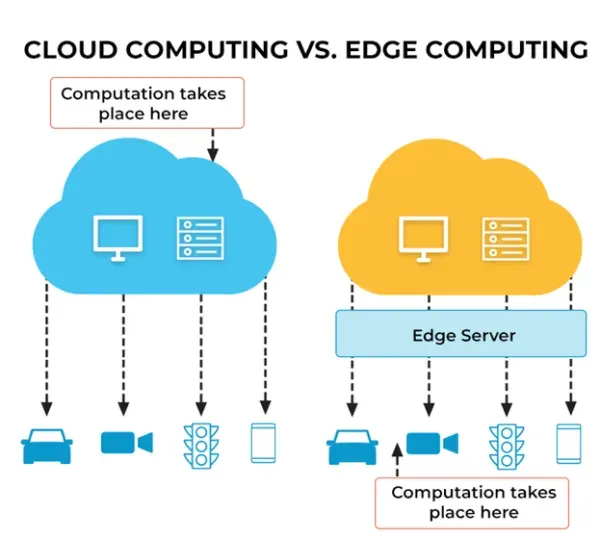

Cloud-to-edge computing is changing how modern apps deliver fast, reliable experiences that keep pace with user interactions. By pushing compute, storage, and intelligence closer to users, edge computing cuts network round-trips and enables low-latency applications across mobile and IoT. This cloud-to-edge continuum combines centralized cloud capacity with distributed edge resources, tied together by an edge network architecture that prioritizes responsiveness and resilience. The benefits include better performance, easier compliance with data-residency rules, and real-time analytics at the network edge. With a hybrid cloud and edge approach, teams can design architectures that scale, protect data, and use bandwidth efficiently across diverse workloads.

Put another way, this is an edge-first model: computing moves closer to users, and local processing nodes work alongside centralized cloud resources. Sometimes called edge-to-cloud integration, the approach emphasizes intelligence near the edge and real-time decision-making. Using MEC (Multi-access Edge Computing), edge-native architectures, and distributed orchestration, teams deploy services at or near the network edge while keeping them coordinated with the cloud. This distribution supports latency-sensitive workloads, offline operation, and privacy-conscious workflows. In practice, organizations build a hybrid cloud and edge ecosystem that decides where each piece of compute runs based on performance, resilience, and governance needs.

Cloud to Edge Computing: Accelerating Apps with a Hybrid Cloud and Edge Strategy

The cloud-to-edge model moves compute, storage, and intelligence from centralized data centers closer to users and devices. That proximity translates into faster responses, more reliable experiences, and better support for bandwidth-constrained scenarios. For latency-sensitive experiences such as real-time collaboration, immersive AR/VR, and interactive gaming, cutting round-trip time is more than a performance tweak: it is a differentiator that keeps users engaged and productive. By combining cloud-scale analytics with edge-side responsiveness, organizations can act on data sooner while maintaining governance and visibility across the full stack.

To capitalize on these advantages, teams design hybrid cloud and edge environments that place each workload according to its latency requirements, data sensitivity, and current network conditions. Edge-native platforms, from MEC and micro data centers to lightweight Kubernetes distributions, enable consistent deployment and management of microservices and AI workloads at the edge. Meanwhile, the cloud handles long-running analytics, global orchestration, and centralized governance. This split serves low-latency applications while keeping deep learning, model training, and large-scale data coordination in the central cloud.
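To make the allocation idea concrete, here is a minimal Python sketch of a placement rule. It assumes each workload is described by a latency budget, a data-residency flag, and a heavy-compute flag. The `Workload` fields, the `place_workload` function, and the rule ordering are hypothetical illustrations, not a standard API. Real schedulers weigh many more signals.

```python
from dataclasses import dataclass
from enum import Enum


class Placement(Enum):
    EDGE = "edge"
    CLOUD = "cloud"


@dataclass
class Workload:
    name: str
    latency_budget_ms: float    # maximum acceptable response time
    data_must_stay_local: bool  # residency or privacy constraint
    needs_heavy_compute: bool   # e.g. model training or batch analytics


def place_workload(w: Workload, cloud_rtt_ms: float, cloud_reachable: bool = True) -> Placement:
    """Hypothetical placement policy; the rule order is an illustrative assumption."""
    # 1. Residency or privacy constraints keep the data (and its processing) local.
    if w.data_must_stay_local:
        return Placement.EDGE
    # 2. Heavy compute needs cloud-scale capacity.
    if w.needs_heavy_compute:
        return Placement.CLOUD
    # 3. If one cloud round-trip alone exceeds the latency budget, or the
    #    cloud is unreachable, run the workload at the edge.
    if w.latency_budget_ms < cloud_rtt_ms or not cloud_reachable:
        return Placement.EDGE
    # 4. Otherwise default to the cloud for centralized governance.
    return Placement.CLOUD
```

The ordering encodes one possible policy:

- Residency constraints win outright.
- Heavy compute goes to the cloud.
- Anything whose latency budget is tighter than the cloud round-trip, or that cannot reach the cloud, stays at the edge.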

Edge Network Architecture: Designing for Low Latency and Resilience

Edge network architecture is the layered, distributed system that makes edge computing scalable and reliable. At the edge, MEC nodes and compact data centers host services close to users, reducing latency and enabling context-aware processing. The central cloud keeps the heavy lifting (data aggregation, global policy enforcement, and large-scale storage), while the edge handles real-time decisions and offline-capable logic. A well-planned edge network architecture provides consistent service discovery, security, and observability across many sites, so organizations can extend their reach without sacrificing performance.
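Service discovery across many sites often comes down to routing each request to the closest healthy site. The sketch below assumes the client already has a round-trip measurement and a health-check result for each site. The `Site` type, `choose_site`, and the `max_rtt_ms` cutoff are illustrative names, not part of any specific platform.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Site:
    name: str
    rtt_ms: float   # measured round-trip time from the client
    healthy: bool   # result of the latest health check


def choose_site(edge_sites: Sequence[Site], cloud: Site, max_rtt_ms: float) -> Site:
    """Pick the lowest-latency healthy edge site within budget, else fall back to the cloud."""
    candidates = [s for s in edge_sites if s.healthy and s.rtt_ms <= max_rtt_ms]
    if candidates:
        return min(candidates, key=lambda s: s.rtt_ms)
    # No edge site qualifies: degrade gracefully to the central cloud region.
    return cloud
```

Falling back to the cloud rather than failing keeps the service available when nearby edge sites are down or congested. The trade-off is higher latency for those requests.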

Implementing this pattern requires careful orchestration of edge platforms, data flows, and governance. Data orchestration and selective synchronization balance data-residency requirements against the benefits of centralized analytics. Security and identity controls at the edge must be built into the architecture from day one, including zero-trust access, encryption, and continuous monitoring. With edge caching, event-driven processing, and distributed data stores, organizations can run hybrid cloud and edge deployments that meet the needs of low-latency applications while keeping a unified, scalable operational model.
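Selective synchronization can be as simple as deciding, per record, what may leave the site. This hypothetical Python sketch makes two assumptions:

- Records are dictionaries with a `residency_locked` flag.
- A fixed allow-list (`EXPORTABLE_FIELDS`) names the fields permitted to reach central analytics.

Both are placeholders for whatever residency policy an organization actually defines.

```python
from typing import Any

# Hypothetical allow-list: the only fields permitted to leave the edge site.
EXPORTABLE_FIELDS = {"device_id", "timestamp", "reading"}


def partition_for_sync(records: list[dict[str, Any]]) -> tuple[list[dict[str, Any]], list[dict[str, Any]]]:
    """Split records into (kept_local, to_cloud).

    Records flagged `residency_locked` never leave the site. Other records are
    synced, but every field outside EXPORTABLE_FIELDS is stripped first."""
    kept_local: list[dict[str, Any]] = []
    to_cloud: list[dict[str, Any]] = []
    for rec in records:
        if rec.get("residency_locked", False):
            kept_local.append(rec)
        else:
            to_cloud.append({k: v for k, v in rec.items() if k in EXPORTABLE_FIELDS})
    return kept_local, to_cloud
```

An allow-list rather than a deny-list means that a newly added field stays local by default until someone explicitly approves it for export.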

Frequently Asked Questions

What is Cloud to Edge computing and how does it enable low-latency applications through edge network architecture?

Cloud-to-edge computing is a design pattern that combines centralized cloud resources with distributed edge resources. The edge network architecture runs latency-sensitive processing at the network edge, near users and devices. This minimizes round-trips to the cloud and delivers near-instant responses for low-latency applications. The cloud handles heavy analytics and governance, while the edge handles real-time data processing, offline operation, and local decision-making. The approach improves user experience, resilience, and bandwidth efficiency, and it helps meet data-residency and privacy requirements.
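Offline capability is often implemented with a store-and-forward buffer. The edge keeps working and queues outbound data while the cloud link is down. It then drains the queue in order when connectivity returns. This is a minimal sketch, assuming a caller-supplied `send` function that returns `True` on success. The `StoreAndForward` class and its bounded, drop-oldest policy are illustrative choices, not a specific product's behavior.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    """Buffer outbound messages while the cloud link is down; flush on reconnect.

    `send` is a hypothetical uplink function that returns True on success."""

    def __init__(self, send: Callable[[dict], bool], max_buffer: int = 10_000):
        self._send = send
        # Bounded buffer: once full, appending silently drops the oldest message.
        self._buffer: deque[dict] = deque(maxlen=max_buffer)

    def publish(self, msg: dict) -> None:
        self._buffer.append(msg)
        self.flush()

    def flush(self) -> int:
        """Send buffered messages in order, stopping at the first failure. Returns the count sent."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break  # link still down; keep the message for the next attempt
            self._buffer.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._buffer)
```

Bounding the buffer keeps a storage-limited edge node from exhausting memory during a long outage, at the cost of losing the oldest data. A production design might instead spill to disk or prioritize messages.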

Which architectural patterns best support Cloud to Edge computing and how do they relate to edge network architecture and hybrid cloud and edge deployments?

Key patterns include:

1. Cloud-to-edge continuum: distributing workloads between the cloud and the edge.
2. Hybrid cloud and edge deployments: spanning regional edge sites and cloud regions for data sovereignty and lower latency.
3. Distributed data stores and caching: keeping data local to the edge, with controlled synchronization to the cloud.
4. Event-driven architectures at the edge: using lightweight event buses to trigger functions on real-time signals.
5. Edge mesh and service discovery: maintaining reliability and observability across many edge sites.

Together, these patterns support a robust edge network architecture, make large deployments manageable, and align with a cloud-to-edge strategy. Start with a focused pilot, emphasize security, governance, and observability, and scale gradually.
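The event-driven pattern can be illustrated with an in-process publish/subscribe bus. This is a minimal sketch, assuming handlers run synchronously in the publisher's thread. `EdgeEventBus` and the topic names are hypothetical. Real edge deployments typically use a lightweight broker, such as an MQTT server, rather than an in-memory dictionary.

```python
from collections import defaultdict
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], None]


class EdgeEventBus:
    """Minimal in-process publish/subscribe bus (a sketch, not a real broker)."""

    def __init__(self) -> None:
        # Maps each topic to the handlers subscribed to it.
        self._handlers: defaultdict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._handlers[topic].append(handler)

    def publish(self, topic: str, event: dict[str, Any]) -> int:
        """Invoke every handler for `topic`; return how many were called."""
        handlers = self._handlers.get(topic, [])  # .get avoids creating empty topics
        for handler in handlers:
            handler(event)
        return len(handlers)
```

For example, a handler subscribed to a hypothetical `sensor/temp` topic could raise a local alert the moment a reading crosses a threshold, without waiting for a cloud round-trip.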

| Section | Key points |
|---|---|
| What is cloud-to-edge computing? | A design pattern that combines centralized cloud resources with distributed edge resources. The cloud handles the heavy lifting (analytics, batch processing, model training, global orchestration, governance). The edge handles latency-sensitive tasks (real-time data processing, UI responsiveness, local decision-making, data-residency constraints). The result is a hybrid continuum in which workloads are moved to the edge or back to the cloud based on latency, bandwidth, privacy, and availability. |
| Key technologies powering cloud-to-edge computing | – Edge computing and MEC (Multi-access Edge Computing): edge nodes bring computing power closer to users and devices; MEC platforms host services at the network edge.<br>– Cloud-native architectures at the edge: containers and lightweight orchestrators (such as edge-oriented Kubernetes distributions) enable consistent deployment and management of edge workloads.<br>– 5G and wide-area networks: high-bandwidth, low-latency networks enable real-time edge processing; SD-WAN and NFV connect edge sites to the cloud.<br>– Edge AI and on-device inference: AI models at the edge deliver real-time insights with low latency and stronger privacy.<br>– Data orchestration and synchronization: coordination with the cloud for data consistency and governance.<br>– Security and identity at the edge: zero-trust access, secure boot, encryption, and continuous monitoring. |
| Benefits for fast, reliable apps | – Reduced latency and improved UX: local processing minimizes round-trips to the cloud for near-instant responses.<br>– Enhanced reliability: the edge can keep operating offline or with degraded cloud connectivity, then sync data when connectivity returns.<br>– Bandwidth optimization and cost savings: filtering and aggregating data at the edge reduces upstream traffic to the cloud.<br>– Data residency and compliance: local processing helps meet regulatory data requirements.<br>– Contextual and personalized experiences: local context enables faster, tailored content. |
| Architectural patterns | – Cloud-to-edge continuum: the cloud handles heavy analytics and governance; the edge runs latency-sensitive services and offline-capable logic; the two are synchronized selectively.<br>– Hybrid cloud and edge deployments: workloads span one or more clouds plus edge capacity at regional sites.<br>– Distributed data stores and caching: the edge caches data locally and syncs with the cloud; event streaming propagates updates.<br>– Event-driven architectures at the edge: lightweight event buses trigger functions as real-time signals arrive.<br>– Edge mesh and service discovery: consistent discovery and observability across edge sites. |
| Real-world benefits by industry | – Gaming and immersive experiences: low-latency, AR/VR-ready gameplay hosted near players.<br>– Real-time analytics and monitoring: industrial IoT and smart cities rely on edge analytics.<br>– Retail and customer engagement: real-time offers and inventory checks at the edge.<br>– Healthcare and privacy-first applications: local processing reduces data exposure, while the cloud handles long-term storage.<br>– Autonomous systems and robotics: on-device perception and control. |
| Challenges and considerations | – Security at the edge: more sites expand the attack surface, requiring zero-trust access, encryption, secure boot, and monitoring.<br>– Management and orchestration complexity: operating many edge sites requires scalable tooling.<br>– Data governance and synchronization: balancing local processing with cloud governance calls for clear residency and sync policies.<br>– Cost and total cost of ownership: CapEx and OpEx for edge hardware and operations.<br>– Skills and organizational alignment: new roles and training for edge design and observability. |
| Implementation guidance | – Start with workload selection: target latency-sensitive or bandwidth-intensive workloads.<br>– Map data flows and dependencies: decide what is processed at the edge versus in the cloud, and where data must reside.<br>– Choose appropriate edge platforms: MEC, micro data centers, edge Kubernetes, or serverless options.<br>– Governance and observability: centralized telemetry with local edge visibility; consistent logs, traces, and metrics.<br>– Security and compliance: identity, access control, encryption, and a secure software supply chain.<br>– Pilot and scale: begin with a scoped pilot, measure latency and reliability, and expand iteratively.<br>– Talent and partnerships: work with providers and integrators that offer edge-native tooling and best practices. |
| Future outlook | – Deeper edge AI integration: AI at the edge becomes more capable and ubiquitous.<br>– More sophisticated orchestration: tools for managing distributed workloads will mature.<br>– Standardized patterns: cloud-to-edge architectures become easier to deploy and operate.<br>– Expansion of the continuum: the continuum extends beyond compute to storage, security, and automation, enabling smarter, more responsive apps across industries. |
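Part of the bandwidth-optimization benefit comes from sending summaries instead of raw samples. This sketch assumes readings arrive as `(timestamp_seconds, value)` pairs. It aggregates them into fixed time windows at the edge, so only one `Summary` per window travels upstream. The names and the choice of min/max/mean statistics are illustrative assumptions.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Iterable


@dataclass(frozen=True)
class Summary:
    window_start: int  # start of the window, in seconds
    count: int
    minimum: float
    maximum: float
    average: float


def summarize(readings: Iterable[tuple[int, float]], window_s: int) -> list[Summary]:
    """Group (timestamp_s, value) readings into fixed windows and summarize each one."""
    buckets: dict[int, list[float]] = {}
    for ts, value in readings:
        start = ts - ts % window_s  # align each timestamp to its window start
        buckets.setdefault(start, []).append(value)
    return [
        Summary(start, len(vals), min(vals), max(vals), mean(vals))
        for start, vals in sorted(buckets.items())
    ]
```

With one-second sensor samples and 60-second windows, this sends one record upstream instead of sixty. The cost is that the cloud no longer sees individual samples.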